Elliot: the future of recruitment

I researched, designed, built and deployed a conversational AI agent to reduce candidate ghosting and drop-off in recruitment. Along the way I learned context engineering, API orchestration and JavaScript integrations, and evolved a learning project into a product with potential clients and a fledgling team.

Check out the brief summary below. For the full scoop on how AI is coming for your granola(!) scroll down the page or contact me.

Summary

Problem: Recruitment often breaks down at the very first touchpoint: candidate screening. I experienced this first-hand during a job application process in which I was asked to build a solution for this very pain point. Elliot began as an initiative to reimagine how companies screen talent, especially in high-volume or specialized hiring contexts, using conversational AI to deliver a more human, responsive and joined-up brand impression.

Process: I interviewed recruiters, role-played conversation flows, built an agent and integrated it with live tools, testing with real users at every step. Recruiters found the AI particularly useful in navigating conversations around salary expectations. I identified meaningful friction in current screening flows, defined high-priority features and mapped a business case based on time-to-hire reduction and candidate engagement. What started as a solo project attracted a product strategist and a salesperson to continue the build.

Outcome: Elliot now lives as a functional AI screening agent demo on a dedicated site, with a battle-refined prompt and context engineering, built-in objection handling, live API integrations and a database for recruiter handoff. I led the product from insight to working MVP, facilitating research, building credibility with real users and designing the roadmap for what’s next. A concept that began as a response to personal pain has evolved into a viable product with commercial potential and a team behind it.

Full story below. Let’s do this.

problem

Starting With People, Not Prompts

Before designing anything, I started where the real problems live: in the day-to-day reality of recruiters and hiring managers. I reached out to people in my network and asked a simple question:

“What’s actually been slowing down your hiring process?”

I wanted firsthand frustration. What I uncovered wasn’t surprising, but it was very human:

Scheduling is a mess, especially across time zones

Candidates drop off when there’s silence or lag

Recruitment teams are small, but their task load is massive

Delays caused by scheduling ping-pong, timezone mismatches or lack of recruiter bandwidth are costing companies great candidates

This validated the opportunity for intervention and became the foundation for an AI voice agent designed to speed up early-stage candidate screening.

“How might we make recruitment feel joined up and personal, using AI in a way that feels natural?”

prototyping

Designing Conversations That Don’t Feel Robotic

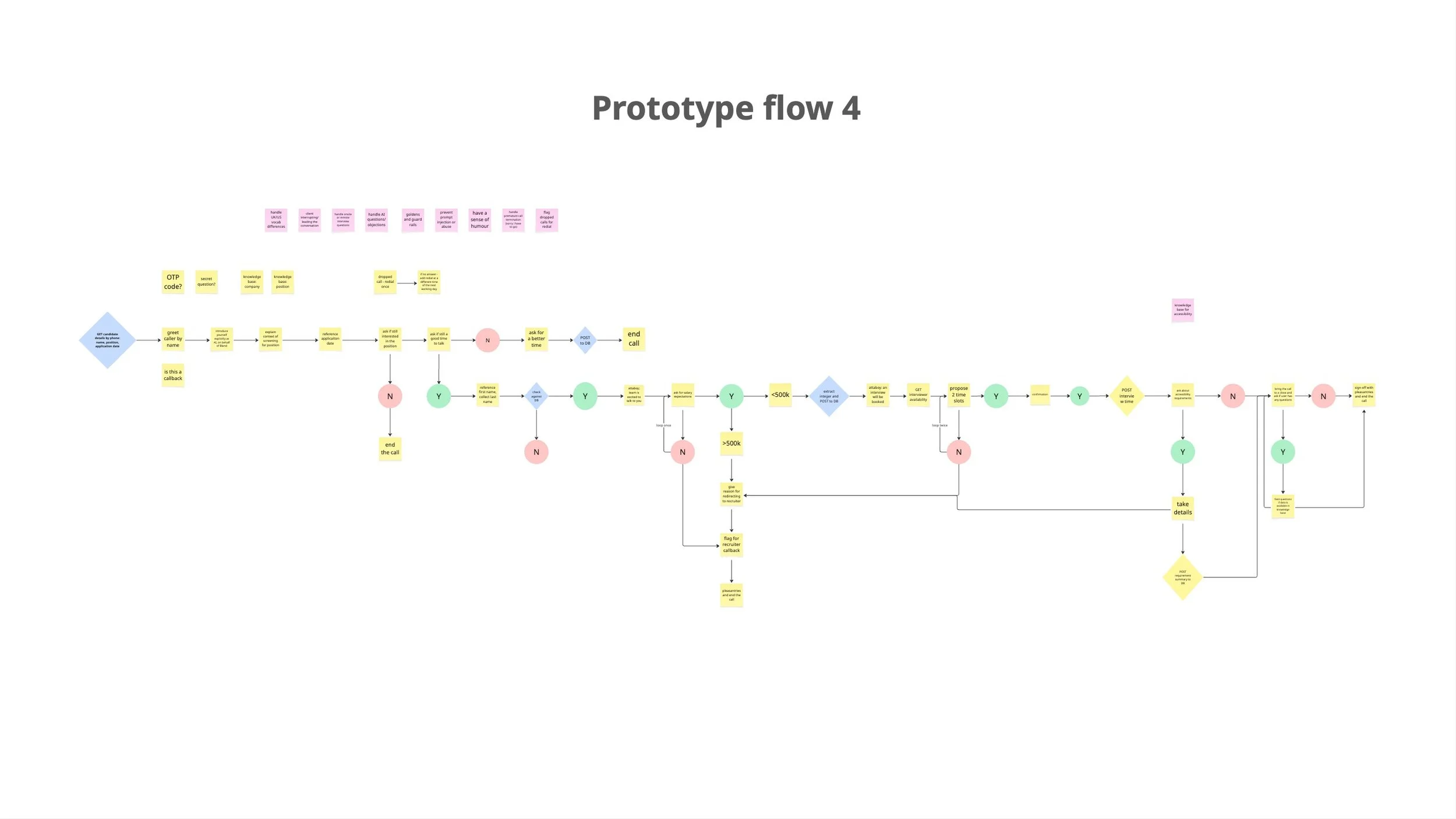

Once I understood the stakes, I turned to the brief. Before touching any tools, I sketched out the conversation flow, not as a chatbot script, but as an exploration of organically branching human dialogue.

Talking to myself would only get me so far, however. To really explore where this conversation could go, I had to get out of the room and out of my own head.

I roleplayed the conversation with real users and, unsurprisingly, discovered many points of friction and deviations I had not thought of myself. No amount of whiteboarding beats user testing.

Pivotal insights:

Testing round 1:

Users apply for a lot of jobs - add a summary reminder at the start of the call.

The AI may catch them at a time they are not available.

Candidates are anxious for an explicit confirmation of the next interview.

Testing round 2:

Asking for the name first raises phishing suspicions.

Confident candidates may lead the conversation - the AI has to stay on target.

Testing round 3:

Candidates may have accessibility requirements.

Some users may be curious or put off by talking to an AI.

Each iteration added emotional intelligence to the interaction. This still wasn’t about building an AI. I was creating a blueprint for the real human experience in this context.

building

How to train your drAIgon

I chose the Bland AI developer platform to translate the prototype schema into a working conversational AI. Bland offers deep engineering and customisation options, from individual prompt engineering, loop conditions and API integrations in every node to system-wide context engineering.

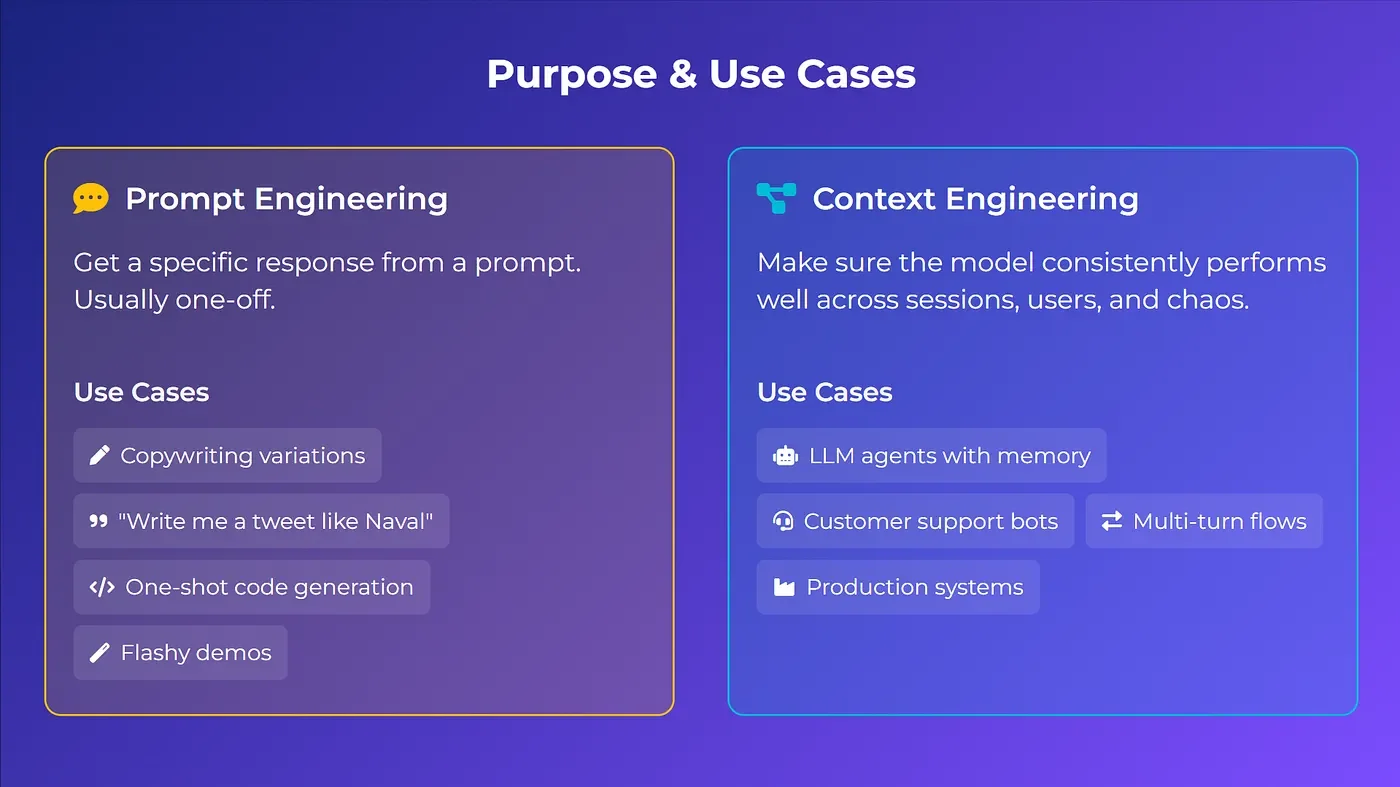

Sidebar: what is context engineering?

Prompt engineering gets you the first good output. Context engineering makes sure the 1000th output is still good.

The guiding principle in designing this AI was to make it sound as natural as possible. I dove deep into Bland’s documentation and studied numerous conversation bots in their developer library to learn what works and what doesn’t. LLM outputs can be unpredictable and tuning them is as much art as engineering. For business applications, I needed to create a tool that was consistently reliable and safe.

I tested each build of the agent constantly, using both a chat interface and simulated phone calls. This allowed me to pinpoint the decisions and expressions the AI produced and refine them using reinforcement. As the agent reached higher levels of accuracy and fluency, I reminded myself once again that testing it within the confines of my expectations was not enough: I had to release it into the wild, albeit on a short leash.

deploying

Beyond the Prototype

I could test the prototype face to face with users on my laptop, editing the key parts of the conversation with details like their name to make it feel personalised to them. However, this struck me as both inelegant and limiting. How could I adapt this for remote testing? How could I make it actually personalised?

I challenged myself to build a simulation of the entire user experience:

a simple website to simulate submitting a job application

a database to store the candidate details

scripting to initiate an AI call to the candidate

extraction of variables from the database to secure and personalise the call

integrating a calendar API to simulate booking the next meeting
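To make the "extract and personalise" step concrete, here is a minimal JavaScript sketch that turns a stored candidate row into a call request. It is an illustration, not the project's actual code. The row shape (`first_name`, `phone`, `role_title`, `applied_at`) is hypothetical. The payload field names (`phone_number`, `pathway_id`, `request_data`) are assumed from Bland's send-call API and should be checked against its API reference. The whitelist is the "secure" part: only the fields the conversation needs ever leave the database.

```javascript
// Sketch: build a personalised call request from a stored candidate row.
// ASSUMPTIONS: the row shape is hypothetical; the payload field names
// (phone_number, pathway_id, request_data) should be verified against Bland's API docs.

// E.164 international format: "+", a non-zero digit, then 7-14 more digits.
const E164 = /^\+[1-9]\d{7,14}$/;

function buildCallRequest(candidate, pathwayId) {
  if (!E164.test(candidate.phone)) {
    throw new Error(`Invalid phone number for candidate ${candidate.id}`);
  }
  // Whitelist: only these fields are passed to the agent as call variables.
  const requestData = {
    first_name: candidate.first_name,
    role_title: candidate.role_title,
    applied_on: candidate.applied_at.slice(0, 10), // YYYY-MM-DD, for the opening reminder
  };
  return {
    phone_number: candidate.phone,
    pathway_id: pathwayId,
    request_data: requestData,
  };
}

// Usage with a made-up row; salary_notes stays in the database.
const row = {
  id: 42,
  first_name: "Sam",
  phone: "+447700900123",
  role_title: "Product Designer",
  applied_at: "2024-05-14T09:30:00Z",
  salary_notes: "internal only",
};
console.log(JSON.stringify(buildCallRequest(row, "pathway-123"), null, 2));
```

Keeping the whitelist in one function makes it easy to audit what personal data the agent can see. The summary fields also feed the reminder that round 1 of testing showed candidates need at the start of the call.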

I built the front end with React, Tailwind and JavaScript using Cursor, backed by a Supabase PostgreSQL database. The code is hosted on GitHub and deployed with Vercel. This allowed me to test the solution with remote users, with no additional setup or guidance.

I used a mock calendar API to simulate booking a follow on interview. While the API provided numerical responses for errors, on successful booking it supplied a string as a response. To overcome this limitation, I structured the node so as to extract a variable from the response, which could then be used in a boolean condition to give an accurate, albeit still simulated, direction for the conversation to go.
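The workaround above can be sketched in a few lines of JavaScript. The sketch assumes errors arrive as numeric codes (409 is used as an example) and a successful booking arrives as a confirmation string; the real mock API's exact shapes are not recorded here, and the node names are hypothetical. The point is that the conversation branches on a single extracted boolean rather than on the raw, inconsistently typed response.

```javascript
// Sketch: normalise a mock calendar API response into a boolean the
// conversation can branch on. ASSUMPTION: numbers mean error codes and a
// non-empty string means a successful booking.

function extractBookingResult(response) {
  // Numeric response: an error code, so no booking was made.
  if (typeof response === "number") {
    return { booking_confirmed: false, error_code: response, confirmation: null };
  }
  // Non-empty string: the API's success message.
  if (typeof response === "string" && response.trim() !== "") {
    return { booking_confirmed: true, error_code: null, confirmation: response.trim() };
  }
  // Anything else is unexpected. Fail closed so the agent never confirms a slot it doesn't have.
  return { booking_confirmed: false, error_code: null, confirmation: null };
}

// The edge condition only needs to test one boolean (node names are hypothetical).
function nextNode(result) {
  return result.booking_confirmed ? "confirm_interview" : "offer_alternative_times";
}

console.log(nextNode(extractBookingResult("Booked: Tue 14:00"))); // confirm_interview
console.log(nextNode(extractBookingResult(409)));                 // offer_alternative_times
```

Failing closed matters here because round 1 showed candidates want an explicit confirmation. A false "you're booked" would do more damage than a retry.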

This setup allowed me to run remote user testing that closely reflected real-world use, not only in the design of the agent but also in its integration and deployment into client databases, tools and interfaces.

The value of this testing round became immediately obvious:

Testing round 4:

The agent can claim to be human, compromising user trust and safety.

Call screening services confuse and break the conversation flow.

To tackle the first challenge, I added a global node that would automatically handle questions about whether the caller is an AI with empathy, humour and honesty, as well as additional guardrails in the node prompts and global context to ensure that the agent did not mislead the user.
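A global node changes how routing works: it is checked on every turn, before the current node's own edges. The JavaScript sketch below illustrates that idea under stated assumptions. Keyword matching stands in for the LLM-evaluated condition a platform like Bland would actually use, and the node names and patterns are hypothetical. The router also records where the conversation was, so it can resume after the honest "yes, I'm an AI" detour.

```javascript
// Sketch of global-node routing. ASSUMPTION: regex matching stands in for an
// LLM-evaluated condition; node names and patterns are hypothetical.

const GLOBAL_NODES = [
  {
    name: "ai_disclosure",
    // Questions about whether the caller is a person or a bot.
    pattern: /\b(are you (a )?(real|human|person|robot|bot|ai)|am i (talking|speaking) to (a )?(human|person|robot|bot|ai))\b/i,
  },
];

// Check global nodes first; otherwise fall back to the current node's own routing.
function route(utterance, currentNode, localRoute) {
  for (const node of GLOBAL_NODES) {
    if (node.pattern.test(utterance)) {
      // Remember where we were so the conversation can resume afterwards.
      return { next: node.name, resumeAt: currentNode };
    }
  }
  return { next: localRoute(utterance), resumeAt: null };
}

// Hypothetical local routing for a salary-expectations node.
const afterSalary = (u) => (/\d/.test(u) ? "record_salary" : "clarify_salary");

console.log(route("Sorry, are you a real person?", "salary_expectations", afterSalary).next); // ai_disclosure
console.log(route("Around 55k", "salary_expectations", afterSalary).next);                    // record_salary
```

Checking global conditions before local ones means the disclosure can interrupt any point in the flow, including the salary questions recruiters cared most about.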

Working with call screening services would prove more challenging, especially as neither the iOS nor the Android feature was available on my personal devices. I found recordings of the call screening services in action, which revealed how they worked:

ask the caller’s name and reason for calling

transcribe the response as a message to the recipient

wait for recipient to take over the call

The challenge was primarily human, then technical. By mapping out the caller journey I was able to design a naturalistic conversational experience that accounts for a scripted third speaker at the outset of the call.

After experimenting with various in-platform tools, I got the best outcome by splitting the initial greeting into two nodes: a quick scripted ‘hello’ followed by a second node that properly greets the user. This left room for a dedicated screening handler to kick in between the two if the agent heard the scripted screening message, at which point it was instructed to give a succinct reason for the call and wait.
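The split greeting can be sketched as a small decision that runs after the scripted "hello". In this JavaScript illustration, the cue phrases are paraphrases of typical screening prompts, not the exact wording used by iOS or Android. On the platform itself the check would be a node condition rather than regexes. The variables (`first_name`, `role_title`, `company`) are hypothetical.

```javascript
// Sketch: decide what the second greeting node should do after the scripted "hello".
// ASSUMPTIONS: cue phrases are illustrative paraphrases, not the real screening scripts;
// variable names are hypothetical.

const SCREENING_CUES = [
  /\bwho(?:'s| is) calling\b/i,
  /\b(state|say) your name\b/i,
  /\breason for (your )?call(ing)?\b/i,
  /\bscreening service\b/i,
];

function isScreeningPrompt(transcript) {
  return SCREENING_CUES.some((re) => re.test(transcript));
}

function afterHello(transcript, vars) {
  if (isScreeningPrompt(transcript)) {
    // Screening handler: a succinct, honest reason for the call, then wait for the recipient.
    return {
      node: "screening_handler",
      say: `Hi, this is an automated assistant calling ${vars.first_name} from ${vars.company} about the ${vars.role_title} application.`,
      waitForHuman: true,
    };
  }
  // No screening detected: carry on with the full greeting.
  return {
    node: "full_greeting",
    say: `Hi ${vars.first_name}, thanks for picking up!`,
    waitForHuman: false,
  };
}

const vars = { first_name: "Sam", role_title: "Product Designer", company: "Acme" };
console.log(afterHello("Please say your name and why you're calling.", vars).node); // screening_handler
console.log(afterHello("Hello, Sam speaking.", vars).node);                         // full_greeting
```

A false positive here is cheap. If a human answers "Who's calling?", the succinct reason still works for them, and the conversation resumes once they reply.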

While testing with recruiters in my network, I spoke to the Chief of Staff of a fast-growing company who needed to hire faster than their HR team could handle. Inadvertently, I found my first client: customer development at its finest. I am currently working with two collaborators to bring this solution to market.

Still here?

Thanks for making it this far.

I’d love to know what you thought. Get in touch using the big orange button.